Our quarterly hack days are a highly anticipated event at Luminous. Spread over two days, each one is a chance for the team to explore emerging technologies that could add value to the Luminous Platform in the future.

This one was more significant than usual, as it was the first since Covid and the first time some of the team had met in person.

For the event, five teams were created, each with a specific technology stream to investigate. Topics included live streaming, recording and replaying VR sessions, 3D model interaction methods, hand and gesture inputs in VR, how the Quest 2 passthrough camera could be used in applications, and how to create more lifelike VR avatars and 3D models.

With topics in hand, the teams split into various offices around the Toffee Factory and the hack commenced!

Up first was team Huminous, with an exploration of 3D scanning and reality capture for XR avatars.

Their aims were as follows:

- Create a lifelike full-body human with natural body motion, rendered in real time within a Unity VR application

- Use photogrammetry and scanning methods to create an optimised mesh, with UVs and bakes, very quickly

- Test out solutions to rig / skin the mesh in Maya, Max and Mixamo

- Once in Unity, link the model up with the Rokoko mocap suit

They started by trying out a range of methods for scanning people. These included our long-range NavVis scanner, iPhones, and photogrammetry packages such as Agisoft and Epic's RealityCapture software. Capturing people is challenging, as any movement is translated into noise in the model. To do this properly, a full photogrammetry rig or specialist body scanner is required.

Even so, the team persevered and got some pretty good results for a day's hack.

Epic's RealityCapture yielded the best results, using approximately 400 images taken with an iPhone.

RealityCapture does a fantastic job of registering all these images together to create a detailed 3D mesh and point cloud. One downside of photogrammetry is that the underlying mesh is very noisy and needs a considerable amount of sculpting and clean-up to get nice results.

Once the mesh was cleaned and optimised, it was rendered in Epic's new MetaHuman package.

https://youtu.be/uq14bPZ_eG8

Team three investigated methods to record and play back VR sessions in real time in 3D. This is a fairly well-documented feature in conventional games; however, there are a number of complications when it is applied to VR.

The solution would need to do the following:

- Record all object movements and user interactions from a VR session as state-based data

- Re-run a recorded session either in headset or on desktop, from any camera position

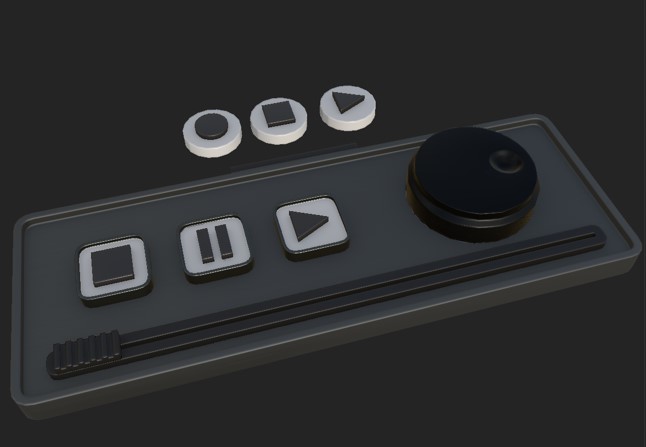

- Support a full set of playback controls: pause, rewind, slow down / speed up, and skip to any point

- Ideally, launch the replay from the user's Session Record in the Portal

- Provide an alternative to streaming from a third-person camera and saving video recordings
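To make the requirements above more concrete, here is a minimal Python sketch of state-based recording and replay. It is not the team's implementation (which would live in Unity); the `SessionRecording` and `Playback` classes, the snapshot format and the linear interpolation are all assumptions made for illustration. The idea is that storing timestamped state rather than video lets a replay be paused, rewound, sped up or skipped to any point, and viewed from any camera.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class SessionRecording:
    """Timestamped position snapshots per object (hypothetical format)."""
    tracks: dict = field(default_factory=dict)  # object id -> [(time, (x, y, z)), ...]

    def record(self, obj_id, t, position):
        # Samples are assumed to arrive in increasing time order.
        self.tracks.setdefault(obj_id, []).append((t, position))

    def state_at(self, obj_id, t):
        """Linearly interpolate an object's position at time t."""
        samples = self.tracks[obj_id]
        times = [s[0] for s in samples]
        i = bisect_right(times, t)
        if i == 0:
            return samples[0][1]    # before the first sample
        if i == len(samples):
            return samples[-1][1]   # at or after the last sample
        (t0, p0), (t1, p1) = samples[i - 1], samples[i]
        a = (t - t0) / (t1 - t0)
        return tuple(u + (v - u) * a for u, v in zip(p0, p1))


class Playback:
    """Playback head over a recording; the camera is independent of it."""

    def __init__(self, recording, duration):
        self.recording = recording
        self.duration = duration
        self.time = 0.0
        self.speed = 1.0  # 0 pauses, negative rewinds, 0.5 / 2.0 slow down / speed up

    def seek(self, t):
        # Skip to any point, clamped to the recorded range.
        self.time = min(max(t, 0.0), self.duration)

    def tick(self, dt):
        # Advance (or rewind) the playback head by one frame.
        self.seek(self.time + dt * self.speed)

    def position(self, obj_id):
        return self.recording.state_at(obj_id, self.time)
```

In this sketch, pausing is `speed = 0`, rewinding is a negative `speed`, and skipping is a call to `seek`. A real recorder would also need rotations, interaction events and some form of compression, none of which are shown here.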

The self-proclaimed ‘I don’t know Jeff’ team opted to investigate hand and gesture interactions using the Quest 2 headsets.

Their goal was to research intuitive hand tracking and gesture interactions that increase immersion and improve the user experience in VR applications.

They wanted to replicate real-world situations and interactions more accurately, and to explore the complexities of implementing these interactions in future projects. Check out the videos below to see the results!

https://youtu.be/aUjf82XNcN0

https://youtu.be/_957IgBG9eE

The next team decided to look at how they could enhance an existing project that trains users to assemble a gate valve using holographic overlays and AI on the HoloLens 2 headset.

The team wanted to make the experience more visual and tactile by allowing the component parts of the valve to be exploded, and then using an X-ray-style scrubber to cut away the exterior and view the internal workings.

They also aimed to implement drop zones so that the user can remove parts of the gate valve to learn more about each asset. Key to this was creating a scrubbable cut-out shader so that the user can see how the gate valve fits together internally without the body or the bonnet occluding the view. The cut-out rotations they created were fully interactable, and the shader also provided a cut-out edge colour.
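The core test behind a cut-out effect like this can be sketched in a few lines. The Python below is only an illustration of the per-point logic, assuming a single flat cut plane; the team's actual shader is not shown in this post, and the function name, parameters and default colours here are hypothetical. In a real Unity shader the same test would run per fragment, with the cut-away branch discarding the pixel.

```python
def cutaway_colour(point, plane_point, plane_normal, base_colour,
                   edge_colour=(1.0, 0.5, 0.0), edge_width=0.01):
    """Return the colour for a surface point, or None if it is cut away.

    plane_normal is assumed to be unit length and to point towards the
    side of the model that should be hidden.
    """
    # Signed distance from the point to the cut plane.
    d = sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal))
    if d > 0:
        return None            # on the hidden side: discard
    if d > -edge_width:
        return edge_colour     # thin band right at the cut
    return base_colour         # untouched part of the model
```

Scrubbing then amounts to moving `plane_point` along the normal, and rotating the cut amounts to changing `plane_normal`.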

The final team investigated applications for the Quest 2 passthrough camera. The camera is intended as a safety feature on the device; however, developers are now using it to create basic mixed reality applications.

In their application, they used a library called ‘Room Mapper’ to define the boundaries of the VR world space and set up models over the tables in the real world.

They used the position information from Room Mapper to define spawner objects that periodically spawn VR balloons within the boundaries of the room. The aim was to pop the balloons with a virtual staple gun. Because the tables also exist as virtual objects, the user can duck and hide behind the real tables, which intercept any shots fired.
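The spawning logic described above might look something like the following Python sketch. It does not use the Room Mapper API; `RoomBounds` is a hypothetical stand-in for whatever boundary data the library provides, assumed here to be a simple axis-aligned box, and `BalloonSpawner` is likewise illustrative.

```python
import random
from dataclasses import dataclass


@dataclass
class RoomBounds:
    """Axis-aligned room box (assumed shape; stand-in for mapped room data)."""
    min_corner: tuple
    max_corner: tuple

    def random_point(self, rng):
        return tuple(rng.uniform(lo, hi)
                     for lo, hi in zip(self.min_corner, self.max_corner))

    def contains(self, p):
        return all(lo <= v <= hi
                   for lo, v, hi in zip(self.min_corner, p, self.max_corner))


class BalloonSpawner:
    """Spawns a balloon at a random in-room position every `interval` seconds."""

    def __init__(self, bounds, interval, rng=None):
        self.bounds = bounds
        self.interval = interval  # must be greater than zero
        self.rng = rng or random.Random()
        self.elapsed = 0.0
        self.balloons = []        # positions of live balloons

    def update(self, dt):
        # Called once per frame with the frame time in seconds.
        self.elapsed += dt
        while self.elapsed >= self.interval:
            self.elapsed -= self.interval
            self.balloons.append(self.bounds.random_point(self.rng))
```

For example, `BalloonSpawner(RoomBounds((0, 0, 0), (4, 2.5, 3)), interval=2.0)` would add one balloon for every two seconds of accumulated `update` time, always inside the box.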

Through this exploration, they gained a significant understanding of the difficulties involved in world space translation for the Quest 2, and of how to combine virtual objects with the real-world video feed from the passthrough camera.

Overall, another fantastic event, highlighting just how much can be done in a short space of time when the team comes together!

https://youtu.be/tKhvWvupVIw